Avatar 3.0/Expressions

Revision as of 04:19, 10 August 2020

Expressions replace emotes and gestures in Avatar 3.0, and they work significantly differently from their Avatar 2.0 counterparts.

Previously, emotes and gestures worked by having a custom animation override a built-in one. For example, if one wanted to bind an animation to the thumbs-up gesture, one would use an override controller to replace the THUMBSUP animation with the custom one. While simple, this created problems, which Avatar 3.0 tries to fix with a more complex, yet accessible system.

In Avatar 3.0, much more of the underlying Unity animation system is exposed, such as the controller and node graph. Animations are placed in playable layers, so that they can be blended together and ordered by priority.

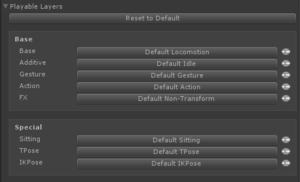

Playable Layers

Base

The base layer affects how your avatar looks while walking, jumping, etc. Generally, you don't want to change this unless your avatar has problems in these specific cases.

Additive

Additive allows you to apply animations that blend with your base animations. One popular example is a breathing animation.

Gesture

This layer allows you to define traditional hand gestures. It can also affect other limbs through a masking system, which tells the animation system which limbs you wish to use. Body parts that are not included in the mask continue playing their normal (base + additive) animations.

This layer can also do non-humanoid animations, like ear-twitches.

Action

These animations completely override what the rest of your animations are doing, much like the old gestures and emotes did.

FX

Advanced animations, such as blendshape magic, can be done here. You should not use this layer for animating hand bones, etc.

Special

Sitting

Probably has to do with animations done while sitting?

TPose

Probably does stuff while in calibration/TPose?

IKPose

Probably does stuff while in calibration/TPose?
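As a rough mental model of how the Base, Gesture, and Action layers described above interact, here is a minimal Python sketch. It assumes a heavily simplified model in which each layer lists only the body parts its mask lets it affect, and later layers win over earlier ones. The names `LAYER_ORDER` and `resolve_pose` and the body-part keys are hypothetical and not part of Unity or the VRChat SDK. Additive blending and the FX layer are left out for brevity.

```python
# Hypothetical, simplified model of playable-layer stacking (not SDK code).
# Assumption: layers are applied in this order, and a later layer replaces
# whatever an earlier layer set for the body parts it touches.
LAYER_ORDER = ["Base", "Gesture", "Action"]


def resolve_pose(layers):
    """Return which animation ends up driving each body part."""
    pose = {}
    for name in LAYER_ORDER:
        # A layer only contains the parts its mask allows it to affect,
        # so parts it does not mention keep their earlier value.
        pose.update(layers.get(name, {}))
    return pose


base = {"legs": "walk", "left_hand": "walk", "right_hand": "walk"}
gesture = {"right_hand": "thumbs_up"}  # masked to the right hand only
action = {"legs": "dance", "left_hand": "dance", "right_hand": "dance"}

# The gesture changes only the right hand; the rest keeps the base animation.
print(resolve_pose({"Base": base, "Gesture": gesture}))

# An action covers every body part, so it overrides everything else.
print(resolve_pose({"Base": base, "Gesture": gesture, "Action": action}))
```

The point of the sketch is the difference between a masked layer, which changes only some body parts, and an Action, which replaces the whole pose.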

Components

VRCExpressionsMenu

This component is used to define a menu that is displayed on the wheel menu. These menus can be nested, and can contain buttons and controls.

VRCExpressionParams

Expression Parameter components allow you to define variables that can be adjusted by controls on the VRCExpressionsMenus. These variables can also trigger animations when they change and meet a set of conditions you define.
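The relationship between the two components can be pictured with a small Python sketch: menus nest, controls write to named parameters, and an animation condition later reads those parameters. This is only an illustration under assumed names. `Control`, `Menu`, `activate`, and the "Faces"/"Smile" labels are hypothetical and do not mirror the actual SDK classes or fields.

```python
from dataclasses import dataclass, field


@dataclass
class Control:
    label: str
    parameter: str  # name of the expression parameter this control drives
    value: int      # value written when the control is activated


@dataclass
class Menu:
    label: str
    controls: list = field(default_factory=list)
    submenus: list = field(default_factory=list)  # menus can be nested


def activate(control, parameters):
    """Write the control's value into the shared parameter table."""
    parameters[control.parameter] = control.value


# A root wheel menu containing one nested submenu with a single button.
smile = Control(label="Smile", parameter="Expression1", value=1)
root = Menu(label="Root", submenus=[Menu(label="Faces", controls=[smile])])

parameters = {"Expression1": 0}
activate(root.submenus[0].controls[0], parameters)

# A condition you define decides whether an animation should trigger.
smile_should_play = parameters["Expression1"] == 1
print(smile_should_play)
```

Keeping the menu (what the user clicks) separate from the parameters (what the animations read) is what lets several controls drive the same variable.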

Tutorial

Making an Animation

- Ensure your Animation window/tab is visible. (Window > Animation > Animation or CTRL+6)

  - I recommend dragging the tab next to your Console tab to reduce clutter.

- Duplicate your avatar in the scene graph. (Right-click, Duplicate)

- Disable the original avatar so you don't break it by accident.

- Back up your project, just in case.

- Select the duplicate avatar in your scene graph.

- Open the Animation tab.

- Select Create.

- Save as desired, although it is recommended to save to a dedicated Animations folder with your avatar files.

- Modify the blendshapes and bones to taste.

- NOTE: You no longer need to animate hand bones when animating blendshapes.

- NOTE: For facial expressions or looping animations, make sure there are only two keyframes and that they are one frame apart.

- Once you're satisfied, delete the duplicate avatar.

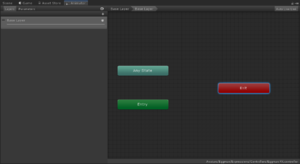

Creating your Controller

- Create a folder for your controller.

  - Recommended: [Avatar] > Expressions > Controllers

- Right-click the folder.

- Create > Animator Controller.

- Name it something useful, such as [Avatar] FX.

- Open it by double-clicking on it.

You are now presented with a flow graph. To pan, hold the middle mouse button and drag; to zoom, use the scroll wheel.

You can think of an Animator Controller as a flow chart: you start at Entry when the avatar is loaded, and in most games, Exit would exit the animator controller. (VRChat will just loop back to Entry instead.) Any State allows you to link to nodes from any other part of the graph. So, for instance, you could be in the middle of sitting down and still be able to trigger states linked from Any State.
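The flow-chart idea can be sketched in a few lines of Python. This is a toy model, not Unity's API: the `Graph` class, its methods, and the state names "Idle", "Sitting", and "AFK Pose" are hypothetical. The sketch also assumes that Any State transitions are checked before the current state's own transitions, and that a transition never re-enters the state it is already in.

```python
# Hypothetical sketch of an animator-style state graph (not Unity's API).
class Graph:
    def __init__(self):
        self.transitions = {}  # state name -> list of (condition, target)
        self.any_state = []    # (condition, target) pairs usable from any state
        self.current = "Entry"

    def add(self, source, condition, target):
        self.transitions.setdefault(source, []).append((condition, target))

    def add_any(self, condition, target):
        self.any_state.append((condition, target))

    def step(self, params):
        """Take at most one transition whose condition holds."""
        # Assumption: Any State transitions are considered first.
        candidates = self.any_state + self.transitions.get(self.current, [])
        for condition, target in candidates:
            # Skip transitions that would re-enter the current state.
            if condition(params) and target != self.current:
                # As described above, VRChat loops Exit back to Entry.
                self.current = "Entry" if target == "Exit" else target
                break
        return self.current


graph = Graph()
graph.add("Entry", lambda p: True, "Idle")
graph.add("Idle", lambda p: p["Seated"], "Sitting")
graph.add("Sitting", lambda p: not p["Seated"], "Exit")
graph.add_any(lambda p: p["AFK"], "AFK Pose")
graph.add("AFK Pose", lambda p: not p["AFK"], "Exit")

params = {"Seated": False, "AFK": False}
visited = [graph.step(params)]      # Entry -> Idle
params["Seated"] = True
visited.append(graph.step(params))  # Idle -> Sitting
params["AFK"] = True
visited.append(graph.step(params))  # Sitting -> AFK Pose, via Any State
params["AFK"] = False
visited.append(graph.step(params))  # AFK Pose -> Exit, which loops to Entry
print(visited)
```

Note that "Sitting" has no transition of its own to "AFK Pose"; the jump is possible only because the transition hangs off Any State.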

Adding Parameters

Parameters allow you to trigger animations based on what is currently going on in terms of avatar movement, positioning, speech visemes, gestures, or even whether your headset is on or off.

Parameters are provided as named variables, and are case-sensitive. For example, AFK will work, but not afk. The built-in parameters are listed below.

| Name | Description | Type | Sync |
|---|---|---|---|
| IsLocal | Is the avatar being worn locally? | Bool | None |
| Viseme | Oculus viseme index (0-14). Jawbone/flap range is 0-100, indicating volume | Int | Speech |
| GestureLeft | Gesture from left hand control (0-7) | Int | IK |
| GestureRight | Gesture from right hand control (0-7) | Int | IK |
| GestureLeftWeight | Analog trigger from left hand control (0.0-1.0) | Float | IK |
| GestureRightWeight | Analog trigger from right hand control (0.0-1.0) | Float | IK |
| AngularY | Angular velocity on the Y axis | Float | IK |
| VelocityX | Velocity on the X axis (m/s) | Float | IK |
| VelocityY | Velocity on the Y axis (m/s) | Float | IK |
| VelocityZ | Velocity on the Z axis (m/s) | Float | IK |
| Upright | How upright you are, from 0 (prone) to 1 (standing straight up) | Float | IK |
| Grounded | If you're touching the ground | Bool | IK |
| Seated | If you're in a station | Bool | IK |
| AFK | If the player is unavailable (HMD proximity/End key) | Bool | IK |
| Expression1 | User defined. 0 to 255 if Int, -1.0 to 1.0 if Float | Int/Float | IK or Playable |
| ... | | | |
| Expression16 | | | |
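To make the case-sensitivity rule and the Expression value ranges from the table concrete, here is a minimal Python sketch. The helper names `parameter_type` and `clamp_expression` are hypothetical, only a handful of the built-in parameters are included, and clamping out-of-range values is an assumption made for illustration rather than documented VRChat behaviour.

```python
# Hypothetical helpers for illustration; not part of Unity or the VRChat SDK.

# A few built-in parameters and their types, copied from the table above.
BUILT_IN_TYPES = {
    "IsLocal": bool,
    "Viseme": int,
    "GestureLeft": int,
    "GestureLeftWeight": float,
    "AFK": bool,
}


def parameter_type(name):
    """Look up a built-in parameter; the name must match exactly, including case."""
    if name not in BUILT_IN_TYPES:
        raise KeyError(f"Unknown parameter {name!r} (names are case-sensitive)")
    return BUILT_IN_TYPES[name]


def clamp_expression(value, kind):
    """Clamp a user-defined Expression value to the range listed in the table."""
    if kind == "Int":
        return max(0, min(255, int(value)))
    if kind == "Float":
        return max(-1.0, min(1.0, float(value)))
    raise ValueError(f"Expression parameters are Int or Float, not {kind!r}")


print(parameter_type("AFK"))         # the exact name is found
print(clamp_expression(300, "Int"))  # values above 255 are clamped
try:
    parameter_type("afk")            # wrong case, so the lookup fails
except KeyError as error:
    print(error)
```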